AI is not only models and data - it is also heat. A lot of heat. As server compute density scales up, the demand for efficient, reliable, and scalable cooling grows in lockstep. Conventional fan-based cooling stops being sufficient once the TDP of a single GPU exceeds 700 W and a full rack pushes toward 120 kW. In this article, we outline what AI server cooling looks like in practice today, when it makes sense to switch to liquid cooling, what immersion delivers, how to design an AI data center for thermal distribution, and how ML-driven HVAC automation is changing infrastructure management.

AI server cooling at 1000 W TDP - why fans are no longer enough

Just a few years ago, standard front-to-back airflow with passive heat sinks was adequate for most rack servers. Today, with a single processor dissipating 700–1000 W and racks of 8×GPU servers pushing beyond 120 kW, the situation is fundamentally different. Cooling an AI server at these power densities requires a different approach - not only at the device level, but across the entire data center. Higher static-pressure fans, air shrouds, and hot/cold aisle containment are no longer sufficient for tightly packed deep learning configurations.
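A quick back-of-the-envelope estimate shows how fast these numbers add up. The sketch below is illustrative only. It assumes every watt drawn ends up as heat, and the per-server overhead (CPUs, memory, NICs, fans, PSU losses) and the servers-per-rack counts are hypothetical values, not vendor specifications.

```python
# Hypothetical inputs for illustration only; substitute your own hardware data.
GPU_TDP_W = 1000          # assumed per-accelerator TDP (high end of 700-1000 W)
GPUS_PER_SERVER = 8
SERVER_OVERHEAD_W = 3000  # assumed CPUs, memory, NICs, fans, PSU losses per server


def server_heat_w(gpu_tdp_w: float, gpus: int, overhead_w: float) -> float:
    """Heat one server must reject, assuming all electrical power becomes heat."""
    return gpu_tdp_w * gpus + overhead_w


def rack_heat_kw(servers_per_rack: int, per_server_w: float) -> float:
    """Total rack heat load in kW."""
    return servers_per_rack * per_server_w / 1000.0


per_server = server_heat_w(GPU_TDP_W, GPUS_PER_SERVER, SERVER_OVERHEAD_W)
for n in (2, 4, 8, 12):
    print(f"{n:2d} servers -> {rack_heat_kw(n, per_server):6.1f} kW per rack")
```

With these assumptions, about a dozen such servers put a single rack past 120 kW.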

The primary challenge is maintaining stable GPU junction temperatures without thermal throttling, which in AI workloads translates directly into longer training and inference times and lower energy efficiency. As a result, more organizations are adopting hybrid cooling topologies that combine conventional air with liquid loops. This approach not only stabilizes component temperatures but can also reduce HVAC energy costs by 30–40%, which at scale means tens of thousands of PLN annually. In practice - if your AI infrastructure is meant for 24/7 duty - you cannot rely on front-bezel airflow alone. Treat cooling as a first-class, strategic component of the AI environment.
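The savings claim is easy to sanity-check for your own site. This is a minimal sketch: the average HVAC load and the electricity price in PLN are hypothetical placeholders, and 30% is simply the low end of the range quoted above.

```python
# All inputs are hypothetical placeholders; replace them with metered data.
HOURS_PER_YEAR = 8760  # 24/7 operation


def annual_cooling_savings(cooling_power_kw: float,
                           reduction: float,
                           price_per_kwh: float) -> tuple[float, float]:
    """Return (kWh saved per year, cost saved per year) for a fractional
    reduction in average cooling (HVAC) power."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    kwh_saved = cooling_power_kw * reduction * HOURS_PER_YEAR
    return kwh_saved, kwh_saved * price_per_kwh


# Example: assumed 20 kW average HVAC load, 30% reduction, assumed 0.80 PLN/kWh.
kwh, pln = annual_cooling_savings(20.0, 0.30, 0.80)
print(f"{kwh:,.0f} kWh/year, about {pln:,.0f} PLN/year")
```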

Liquid-cooled server - when does direct-to-chip make more sense than airflow?

Direct-to-chip systems are now preferred wherever conventional air cooling reaches its limits. Simply adding fans or increasing air volume drives up energy use and noise while still failing to hold GPU/CPU core temperatures at optimal levels. A liquid-cooled server - specifically one using direct liquid cooling (DLC) - removes heat directly at the component hot spots: a cold plate mounted on the processor transfers heat into the coolant, which circulates in a closed loop to a heat exchanger.
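Because the cold plate moves heat into the coolant as sensible heat, the flow a loop needs follows from the energy balance Q = ṁ · c_p · ΔT. The sketch below assumes plain water and a hypothetical 8 kW captured by the cold plates in one server. Real loops often use glycol mixtures with a lower c_p, and vendors publish their own flow requirements.

```python
# Sensible-heat balance for a single-phase liquid loop: Q = m_dot * c_p * dT.
# Properties approximate water near room temperature; glycol mixes differ.
CP_WATER_J_PER_KG_K = 4186.0   # specific heat of water, approx.
DENSITY_WATER_KG_PER_L = 1.0   # approximation (about 0.997 kg/L at 25 °C)


def required_flow_l_per_min(heat_w: float, delta_t_k: float,
                            cp: float = CP_WATER_J_PER_KG_K,
                            density_kg_per_l: float = DENSITY_WATER_KG_PER_L) -> float:
    """Coolant volume flow needed to absorb heat_w with a supply-to-return
    temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp * delta_t_k)
    return mass_flow_kg_s / density_kg_per_l * 60.0


# Example: an assumed 8 kW captured by cold plates, 10 K temperature rise.
print(f"{required_flow_l_per_min(8000, 10):.1f} L/min")
```

Halving the allowed temperature rise doubles the required flow, which is why loop ΔT is a key design parameter alongside pump and manifold sizing.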

Notably, a growing number of platforms - such as Dell PowerEdge XE9680 and Supermicro 421GE-TNHR - now ship factory-prepared for liquid cooling. You do not need to rebuild the entire AI data center to gain DLC benefits - ready-made kits with mounting plates, manifolds, and coolant loops can be deployed. The result? Junction temperatures around 55–60°C at full H100 load, no throttling, reduced fan duty, and lower total system power draw. If efficiency and density matter - and in AI they do - liquid cooling is no longer experimental; it is a practical alternative to traditional air cooling.
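Junction temperatures like these can be reasoned about with a first-order, lumped thermal-resistance model, T_j = T_inlet + P × R_th, where R_th is the combined junction-to-coolant resistance of the die, thermal interface material, and cold plate. The inlet temperature and R_th below are hypothetical; real values come from the vendor's thermal data.

```python
def junction_temp_c(coolant_inlet_c: float, power_w: float,
                    r_th_k_per_w: float) -> float:
    """First-order steady-state estimate: T_j = T_inlet + P * R_th,
    where R_th is the junction-to-coolant thermal resistance in K/W."""
    return coolant_inlet_c + power_w * r_th_k_per_w


def max_inlet_c(target_tj_c: float, power_w: float, r_th_k_per_w: float) -> float:
    """Warmest coolant inlet that still keeps T_j at or below the target."""
    return target_tj_c - power_w * r_th_k_per_w


# Hypothetical inputs: 700 W accelerator, 30 °C inlet, 0.04 K/W (not vendor data).
print(f"T_j ~ {junction_temp_c(30.0, 700.0, 0.04):.1f} °C")
print(f"max inlet for T_j <= 60 °C: {max_inlet_c(60.0, 700.0, 0.04):.1f} °C")
```

The model ignores coolant warming along the loop and non-uniform heat across the die, so it tends to underestimate hot-spot temperature.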

Immersion cooling in practice - what submersion looks like in AI environments

At the high end, immersion solutions outperform other methods on efficiency. In this approach, the entire server - GPUs, CPU, motherboard, and power supply - is submerged in a special dielectric fluid that extracts heat directly from every component, eliminating fans. For AI, that is a major advantage: hotspot bottlenecks, airflow constraints, and uneven heat distribution effectively disappear.

Vendors such as Submer and GRC report that immersion cooling cuts HVAC energy consumption by 40–60%, with Power Usage Effectiveness (PUE) as low as 1.03. A further benefit is heat reuse - for example, space heating or domestic hot water - which is particularly valuable for organizations focused on their carbon footprint. Immersion is not a universal fit, however. It requires a shift in infrastructure thinking, integration with energy recovery systems, and properly selected immersion tanks. But if your AI data center targets 100 kW+ per rack, it is hard to find a more economical investment today.
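PUE is defined as total facility power divided by IT equipment power, so everything above 1.0 is overhead (cooling, power conversion, lighting). The sketch below converts PUE into overhead kilowatts for a hypothetical 1 MW IT load. The 1.50 figure for an air-cooled site is an assumed comparison point, while 1.03 is the low end quoted above.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a PUE value."""
    return it_kw * (pue_value - 1.0)


IT_LOAD_KW = 1000.0  # hypothetical 1 MW of IT load
for label, p in [("air-cooled (assumed)", 1.50), ("immersion (quoted low end)", 1.03)]:
    print(f"{label:28s} PUE {p:.2f} -> {overhead_kw(IT_LOAD_KW, p):6.0f} kW overhead")
```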

AI data center under high load - how to plan rack density and thermal distribution?

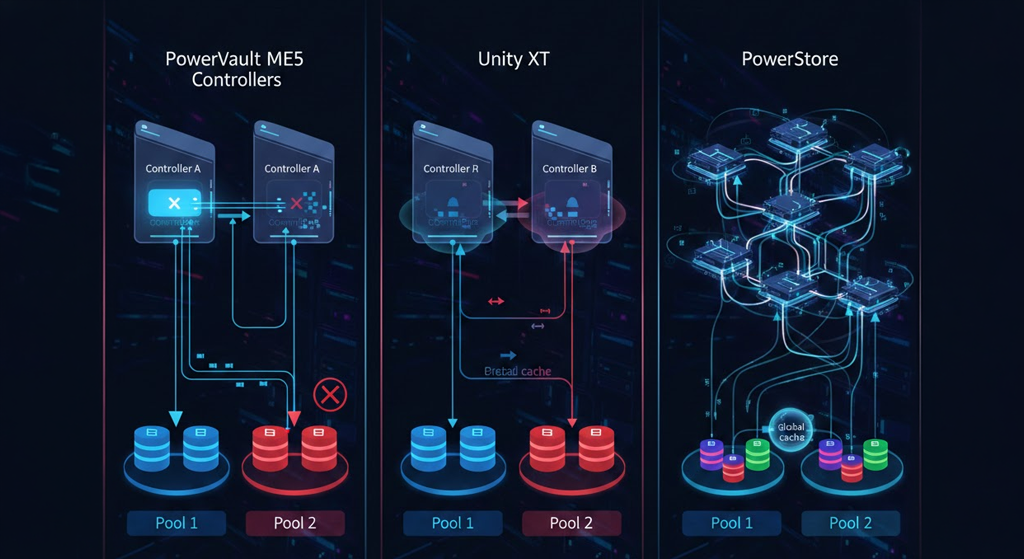

Planning an AI data center differs from planning a conventional server environment. You cannot simply “add more CRACs.” You must analyze thermal load distribution across time and space, and account for GPU workload profiles, model training sequences, and power-draw transients. With TDPs of 700–800 W or more per accelerator - and 8–10 accelerators in a single chassis - you have to engineer cooling not only at the server level, but across the rack and row.
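One concrete rack-level planning step is splitting each rack's heat between the liquid loop and room air, since cold plates typically cover only the hottest components. The sketch below assumes a per-server "capture ratio" (fraction of heat removed by liquid). The 80% value, the server names, and the rack mix are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    heat_w: float          # total heat rejected at full load
    liquid_capture: float  # assumed fraction removed by the liquid loop (0 = air-only)


def rack_budget(servers: list[Server]) -> tuple[float, float]:
    """Return (kW to the liquid loop, kW left for room air) for one rack."""
    liquid_kw = sum(s.heat_w * s.liquid_capture for s in servers) / 1000.0
    total_kw = sum(s.heat_w for s in servers) / 1000.0
    return liquid_kw, total_kw - liquid_kw


# Hypothetical rack: eight DLC GPU servers plus two air-cooled support nodes.
rack = ([Server("gpu-dlc", 11000, 0.80) for _ in range(8)]
        + [Server("storage", 1200, 0.0), Server("switch", 800, 0.0)])
liquid_kw, air_kw = rack_budget(rack)
print(f"liquid loop: {liquid_kw:.1f} kW, residual air: {air_kw:.1f} kW")
```

Even in a largely liquid-cooled rack, the residual air load in this example is still close to 20 kW, which is why air handling cannot be dropped from the design.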

Modern designs therefore incorporate dedicated liquid cooling zones, dynamic valves, adaptive flow controllers, and delta-T sensors at the U level. A well-planned AI data center is about more than PUE - it is about operational stability and scalable growth without site migration. Dell, Lenovo, and Supermicro already provide solutions built for such environments, including liquid manifolds, pump redundancy, heat exchangers, and real-time coolant telemetry. If you are starting a project with AI in mind, do not treat cooling as an add-on - plan it on par with compute capacity.
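The idea behind an adaptive flow controller fed by delta-T sensors can be illustrated with a basic PI (proportional-integral) loop that adjusts coolant flow to hold the temperature rise at a target. This is a toy sketch under stated assumptions: the class name, gains, flow limits, and 10 K setpoint are hypothetical, and the plant model treats the loop as reaching steady state every step (ΔT = Q / (ṁ · c_p)). Commercial controllers are more sophisticated and are not modeled here.

```python
from dataclasses import dataclass

CP_WATER = 4186.0  # J/(kg*K), approximate specific heat of water


@dataclass
class DeltaTFlowController:
    """Hypothetical PI controller: sets coolant mass flow (kg/s) so the
    supply/return delta-T reported by a sensor stays at a target value."""
    setpoint_k: float = 10.0
    kp: float = 0.002      # kg/s per K of error (illustrative gain)
    ki: float = 0.002      # kg/s per K*s of accumulated error (illustrative gain)
    bias: float = 0.1      # nominal flow, kg/s
    min_flow: float = 0.05
    max_flow: float = 1.0
    integral: float = 0.0

    def update(self, measured_delta_t_k: float, dt_s: float = 1.0) -> float:
        # Delta-T above target means the loop is under-flowed -> raise flow.
        error = measured_delta_t_k - self.setpoint_k
        candidate = self.integral + error * dt_s
        flow = self.bias + self.kp * error + self.ki * candidate
        if self.min_flow <= flow <= self.max_flow:
            self.integral = candidate  # integrate only when not saturated (anti-windup)
        return min(self.max_flow, max(self.min_flow, flow))


def simulate(heat_w_per_step: list[float]) -> list[tuple[float, float]]:
    """Toy plant: each step settles instantly, so dT = Q / (m_dot * c_p).
    Returns (measured delta-T, commanded flow) per step."""
    ctrl = DeltaTFlowController()
    flow = ctrl.bias
    trace = []
    for q in heat_w_per_step:
        delta_t = q / (flow * CP_WATER)
        flow = ctrl.update(delta_t)
        trace.append((delta_t, flow))
    return trace


# Hypothetical load step from 8 kW to 12 kW halfway through.
trace = simulate([8000.0] * 300 + [12000.0] * 300)
print(f"final delta-T {trace[-1][0]:.2f} K at {trace[-1][1]:.3f} kg/s")
```

Clamping the output and pausing integration while saturated keeps the loop from over-driving the pump after a large load swing.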

AI-driven server cooling - automation, sensors, and ML in HVAC

Cooling automation is the next step toward higher efficiency - especially when the system must react dynamically to fluctuating loads. By integrating temperature, coolant flow, pressure, and power sensors with ML systems, AI server cooling can be controlled adaptively, down to the level of individual GPUs. Companies like Schneider Electric, Vertiv, and Rittal deploy ML-enabled HVAC that predicts where and when load peaks will occur and pre-activates flows or reprioritizes sections of the rack accordingly.
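As a deliberately simple stand-in for an ML forecaster, the sketch below fits an ordinary least-squares linear trend to recent power telemetry, extrapolates it a few samples ahead, and pre-sets coolant flow for the predicted heat before the peak arrives. The function names, the 10 K target delta-T, the 10% headroom, and the telemetry values are all hypothetical.

```python
CP_WATER = 4186.0  # J/(kg*K), approximate specific heat of water


def linear_trend_forecast(samples_w: list[float], steps_ahead: int) -> float:
    """Fit y = a + b*t by ordinary least squares to recent power samples
    (t = 0..n-1) and extrapolate steps_ahead samples past the last one."""
    n = len(samples_w)
    if n < 2:
        raise ValueError("need at least two samples")
    t_mean = (n - 1) / 2
    y_mean = sum(samples_w) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(samples_w))
    den = sum((t - t_mean) ** 2 for t in range(n))
    slope = num / den
    return y_mean + slope * ((n - 1 + steps_ahead) - t_mean)


def preset_flow_kg_s(forecast_heat_w: float, target_delta_t_k: float = 10.0,
                     headroom: float = 1.1) -> float:
    """Flow to command ahead of the peak, with an assumed 10% safety margin."""
    return headroom * max(forecast_heat_w, 0.0) / (CP_WATER * target_delta_t_k)


# Hypothetical GPU-node power telemetry (W), sampled every 10 s, ramping up.
recent = [6000, 6400, 6800, 7200, 7600]
predicted = linear_trend_forecast(recent, steps_ahead=3)
print(f"forecast {predicted:.0f} W -> pre-set flow {preset_flow_kg_s(predicted):.3f} kg/s")
```

A linear trend only captures ramps; the benefit of a learned model is recognizing recurring patterns, such as the start of a training epoch, before the ramp is visible in the data.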

This is not just convenience - it is real savings. Dynamic cooling can cut cooling power consumption by 10–20%, extend fan life, reduce coolant usage, and optimize pump operation. Add BMS and DCIM integration, and you can manage the entire environment from a single console. If you care about long-term TCO and stability, think of cooling not just as physics - but as a subsystem managed by software. In AI, server cooling today also means code, data, and prediction - and a growing number of organizations recognize this.
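Part of why software-driven speed control pays off is the fan (and pump) affinity law: for a given fan and system, shaft power scales roughly with the cube of rotational speed, so modest speed reductions save disproportionately. This idealized relation ignores motor and drive efficiency, so treat the figures as upper-bound intuition rather than measured savings.

```python
def fan_power_ratio(speed_ratio: float) -> float:
    """Fan affinity law: shaft power scales with the cube of rotational speed
    (same fan, same air density, same system curve)."""
    return speed_ratio ** 3


for pct in (100, 90, 80, 70):
    r = pct / 100
    print(f"{pct:3d}% speed -> {fan_power_ratio(r) * 100:5.1f}% power")
```

Running a fan at 80% speed draws only about half the power of running it flat out, which is why controllers that track actual load rather than worst case tend to show up quickly in the energy bill.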