Training large-scale AI models is far beyond the capabilities of ordinary desktops. If you plan to run LLMs, process big data, or design custom deep learning solutions, you need to know which AI server can actually handle the workload. The choice isn’t just “pick something with an RTX”—the entire platform matters: GPU, CPU, RAM, storage, cooling, and scalability.

AI servers - which GPUs do you really need for deep learning?

Choosing a GPU for AI workloads is no longer as simple as saying “get an RTX card.” For large-model training, generative networks, or LLMs, performance depends not only on the number of CUDA cores but also on VRAM capacity, memory bandwidth, Tensor Core support, and compatibility with AI frameworks. In production deployments, the standard choices are NVIDIA H100, A100, L40S, and A800, available in systems such as the Dell PowerEdge XE9680, Lenovo SR670 V2, or Supermicro AS-4125GS-TNRT.

If you don’t need that much power but want to train smaller models or fine-tune existing architectures, for example in R&D environments, cards like the RTX A6000 (48 GB), RTX 4090 (24 GB), or even the RTX 4070 Ti Super (16 GB) remain cost-efficient while still delivering strong performance. When selecting a GPU, don’t rely on gaming benchmarks; what matters more is VRAM capacity (at least 24 GB for serious projects, which puts the 16 GB card in the experimentation tier), support for mixed precision (FP16, BF16), and efficiency at large batch sizes. Just as important, an AI server should leave room for GPU expansion, preferably through a modular design or full PCIe Gen5 compatibility.
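
To see why VRAM capacity dominates the decision, here is a minimal sizing sketch. It assumes the common rule of thumb of about 16 bytes per parameter for mixed-precision training with the Adam optimizer; the `activation_overhead` factor and the function names are hypothetical, and real usage varies with batch size, sequence length, and framework.

```python
import math

# Rule-of-thumb bytes per parameter for mixed-precision training with Adam:
# 2 (FP16/BF16 weights) + 2 (gradients) + 12 (FP32 master weights and two Adam moments).
BYTES_PER_PARAM = 16


def training_vram_gb(params_billions: float, activation_overhead: float = 0.2) -> float:
    """Estimate VRAM in GB for full training.

    activation_overhead is a hypothetical fudge factor; real activation memory
    depends on batch size, sequence length, and checkpointing.
    """
    model_state_gb = params_billions * BYTES_PER_PARAM  # 1e9 params x bytes = GB
    return model_state_gb * (1.0 + activation_overhead)


def min_gpus(params_billions: float, vram_per_gpu_gb: float) -> int:
    """Smallest GPU count that holds the estimate, assuming ideal sharding."""
    return max(1, math.ceil(training_vram_gb(params_billions) / vram_per_gpu_gb))


if __name__ == "__main__":
    for size in (1, 3, 7):
        print(f"{size}B params: ~{training_vram_gb(size):.0f} GB, "
              f"{min_gpus(size, 24)} x 24 GB or {min_gpus(size, 80)} x 80 GB")
```

The estimate covers full training only. Inference and parameter-efficient fine-tuning need far less memory, which is why 24 GB cards remain useful for smaller projects.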

Deep learning servers - RAM, storage, and cooling requirements

It’s easy to focus solely on GPUs, but without carefully balanced RAM, CPU, storage, and cooling, the GPUs will sit idle waiting for data. In professional AI environments, 128 GB of RAM is the minimum, while enterprise servers frequently run 512 GB, 1 TB, or even 4 TB, especially when handling multiple models or complex preprocessing pipelines. Enterprise environments also require ECC memory: an uncorrected bit flip during a multi-day training run can crash the job or silently corrupt the result.
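
A rough way to sanity-check a RAM figure is to add up what the data pipeline keeps in memory. The sketch below is a simplification under stated assumptions: the parameter names `num_workers` and `prefetch_factor` mirror the PyTorch `DataLoader` options, while the formula, the 16 GB OS reserve, and the sample values are illustrative only.

```python
def loader_buffer_gb(num_workers: int, prefetch_factor: int,
                     batch_size: int, sample_mb: float) -> float:
    """RAM held by prefetched batches, assuming each worker keeps
    `prefetch_factor` decoded batches in flight."""
    return num_workers * prefetch_factor * batch_size * sample_mb / 1000.0


def host_ram_gb(concurrent_jobs: int, buffer_gb_per_job: float,
                dataset_cache_gb: float, os_reserve_gb: float = 16.0) -> float:
    """Total host RAM: per-job loader buffers + cached dataset + OS reserve (assumed)."""
    return concurrent_jobs * buffer_gb_per_job + dataset_cache_gb + os_reserve_gb


if __name__ == "__main__":
    # Hypothetical workload: 16 workers, 2 batches prefetched each, 256 samples of 0.6 MB.
    buffers = loader_buffer_gb(16, 2, 256, 0.6)
    total = host_ram_gb(concurrent_jobs=2, buffer_gb_per_job=buffers, dataset_cache_gb=100.0)
    print(f"loader buffers per job: {buffers:.1f} GB, host RAM needed: {total:.0f} GB")
```

Running several training jobs side by side multiplies the buffer term, which is how a server that looked generously sized for one model runs short with three.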

When it comes to storage, NVMe is mandatory. A SATA SSD tops out at roughly 550 MB/s, which cannot sustain the I/O that massive datasets demand, particularly when multiple GPUs read in parallel. Servers like the Lenovo SR670 V2 or Dell PowerEdge XE9680 pair multiple GPUs with fast NVMe Gen4 SSDs, while newer editions already support Gen5. On top of that, thermal management is critical: an H100 SXM module can draw up to 700 W, so four or eight of them produce enormous heat. That calls for either liquid cooling (offered, for example, by Supermicro) or robust airflow, together with redundant PSUs of at least 2 kW each. Without this, a production deployment will be unstable.
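
The storage argument comes down to simple arithmetic, sketched below. The interface ceilings are assumed round figures for sequential reads, not vendor specifications, and the workload numbers are hypothetical.

```python
# Approximate sequential-read ceilings in GB/s (assumed round figures, not vendor specs).
INTERFACE_GBPS = {
    "SATA III SSD": 0.55,
    "NVMe PCIe Gen4 x4": 7.0,
    "NVMe PCIe Gen5 x4": 14.0,
}


def required_read_gbps(num_gpus: int, samples_per_sec_per_gpu: float,
                       sample_mb: float) -> float:
    """Sustained read rate in GB/s needed to keep every GPU fed, with no caching."""
    return num_gpus * samples_per_sec_per_gpu * sample_mb / 1000.0


def interfaces_that_keep_up(required_gbps: float) -> list[str]:
    """Names of the interfaces whose ceiling covers the required rate."""
    return [name for name, ceiling in INTERFACE_GBPS.items() if ceiling >= required_gbps]


if __name__ == "__main__":
    # Hypothetical workload: 8 GPUs, each consuming 1500 samples/s of 0.15 MB.
    need = required_read_gbps(8, 1500, 0.15)
    print(f"required: {need:.2f} GB/s, sufficient: {interfaces_that_keep_up(need)}")
```

With these placeholder numbers a single SATA drive falls short by a factor of three, while one Gen4 NVMe drive still has headroom. Page caching and multi-drive striping change the picture, so treat this as a first-pass check.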

CPUs for AI servers - preventing data pipeline bottlenecks

Contrary to popular belief, CPUs in AI servers are not just “for system overhead.” In large machine learning pipelines, preprocessing, decoding, augmentation, and I/O can take longer than the training step itself if the CPU lags behind, leaving the GPUs waiting for data. A proper machine learning server therefore needs a processor that won’t become a bottleneck. Currently, the two leading platforms are 5th Gen Intel Xeon Scalable and AMD EPYC 9004 “Genoa.” They offer dozens of physical cores per socket (up to 64 on Xeon and up to 96 on Genoa), DDR5 memory support, abundant PCIe Gen5 lanes, and strong multi-threaded performance.

In practice, if you intend to train multiple models simultaneously or need real-time data pipeline processing, look for 32–64 physical cores at 3 GHz or higher. Servers such as the Supermicro AS-4125GS-TNRT or Dell PowerEdge R760xa offer high flexibility in CPU-GPU-RAM configurations. For smaller budgets (under ~30,000 PLN) or test environments, AMD Threadripper PRO 7000 or Intel Xeon W-3400 can handle 2–3 high-end GPUs and multiple VMs without issue. A well-balanced CPU won’t directly accelerate model training, but it will significantly shorten the entire ML cycle.
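
Whether a given core count is enough can be estimated by comparing how fast the GPUs consume samples with how fast one core can prepare them. This is a minimal sketch; the throughput figures and the 25% headroom are hypothetical and should be replaced with measurements from your own pipeline.

```python
import math


def cores_needed(num_gpus: int, gpu_samples_per_sec: float,
                 core_samples_per_sec: float, headroom: float = 1.25) -> int:
    """Cores required so preprocessing keeps pace with GPU consumption.

    headroom is an assumed safety margin for I/O stalls and OS overhead.
    """
    demand = num_gpus * gpu_samples_per_sec
    return math.ceil(demand * headroom / core_samples_per_sec)


def is_cpu_bound(available_cores: int, num_gpus: int,
                 gpu_samples_per_sec: float, core_samples_per_sec: float) -> bool:
    """True when the CPU cannot prepare data as fast as the GPUs consume it."""
    return available_cores < cores_needed(num_gpus, gpu_samples_per_sec,
                                          core_samples_per_sec)


if __name__ == "__main__":
    # Hypothetical: 4 GPUs at 2000 samples/s each, one core prepares 300 samples/s.
    print("cores needed:", cores_needed(4, 2000, 300))
    print("16 cores CPU-bound?", is_cpu_bound(16, 4, 2000, 300))
```

With these placeholder figures the requirement lands inside the 32–64 core range, and a 16-core CPU would leave the GPUs starved.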

Beyond H100 - alternative GPUs worth considering

The H100 and A100 are often seen as the face of AI computing, since they dominate HPC clusters and handle the largest models. But not every project requires such hardware. For startups or mid-sized organizations, less heavily marketed cards often provide excellent cost-efficiency. Examples include the NVIDIA RTX A6000, L40S, A800, or RTX 4090, which are capable of training transformer architectures or language models of up to several billion parameters in workstation or rackmount setups.

Other alternatives include the AMD Instinct MI300, increasingly popular in open-source ecosystems where CUDA compatibility is not critical. Certain systems can even combine different GPU classes within one chassis. The Dell PowerEdge T640, for instance, suits test and development deployments that mix an RTX 4070 Ti with an A6000 to evaluate model performance across configurations. Not every AI implementation requires H100s, and in many cases two or three mid-range cards bring faster time-to-market without a multimillion investment.

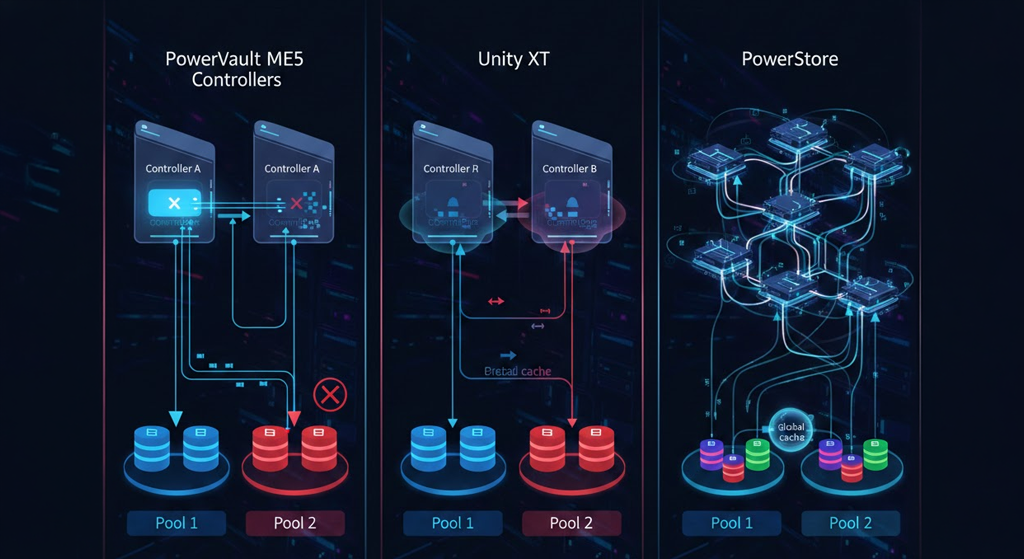

Flexible AI servers - tower vs. rack vs. blade

Not every AI project demands a complete datacenter with liquid cooling. For early-stage setups or R&D departments, a properly configured tower can be sufficient. For example, a Dell T640 with dual Xeon Gold CPUs, 512 GB RAM, and slots for multiple RTX A6000 cards offers robust performance while fitting under a desk. Here, form factor plays a key role: you don’t always need a full rack system, particularly for smaller datasets and short iteration cycles.

On the other hand, if you plan for scaling, multi-GPU infrastructure, or cluster/hybrid integration, modular rack and blade platforms make more sense. For instance, the Dell PowerEdge FX2s (with FC640/FC830 nodes) enables easy expansion, resource reallocation, and improved power and network management. A flexible AI server design lets you match infrastructure to the scenario, which often matters more than a raw GPU benchmark.

Cloud vs. on-premise AI servers - when does it pay off?

The cloud vs. physical hardware decision isn’t ideological; it’s about time, scale, and cost predictability. Cloud platforms bring clear advantages: no upfront CAPEX, on-demand scaling, and quick configuration testing without sunk costs. If you’re building an MVP, running models only occasionally, or lack infrastructure of your own, cloud providers like Azure, AWS, or OVH are the sensible choice. At scale, however, costs escalate quickly.

For continuous 24/7 inference or large-model training, cloud services can be several times more expensive than an owned deep learning server. Compliance, GDPR, and data security requirements also favor on-premise infrastructure. With predictable workloads and an in-house team, a customized, expandable AI server can pay for itself within months. In 2025, an 8×H100 cloud configuration may cost tens of thousands of PLN per month, while physical hardware running at sustained high utilization can amortize within 4–6 months. The decision must therefore fit real operational scenarios, not just Excel projections.
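
The break-even point is easy to model once you plug in your own quotes. The sketch below is illustrative only: every figure in the example is a hypothetical placeholder, not a price from any provider or vendor, and the model ignores financing, depreciation, and staffing.

```python
from typing import Optional

HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def monthly_cloud_cost(hourly_rate: float, utilization: float) -> float:
    """Cloud bill for one month; utilization is the fraction of hours actually used."""
    return hourly_rate * HOURS_PER_MONTH * utilization


def break_even_months(hardware_cost: float, hourly_rate: float, utilization: float,
                      monthly_onprem_opex: float) -> Optional[float]:
    """Months until owned hardware is cheaper than cloud, or None if it never is."""
    saving = monthly_cloud_cost(hourly_rate, utilization) - monthly_onprem_opex
    if saving <= 0:
        return None  # at this utilization the cloud stays cheaper
    return hardware_cost / saving


if __name__ == "__main__":
    # Placeholder numbers in PLN: server price, cloud rate per hour, power and hosting per month.
    for utilization in (1.0, 0.5, 0.1):
        months = break_even_months(600_000, 200, utilization, 26_000)
        print(f"utilization {utilization:.0%}: break-even = {months}")
```

The same hardware that pays for itself quickly under 24/7 load never breaks even at 10% utilization, which is the MVP and occasional-use case where cloud remains the better fit.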