AWS outage of 20 October 2025 – analysis of this year's biggest cloud infrastructure disaster and conclusions for IT architecture

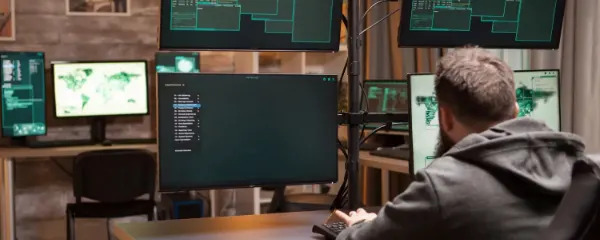

October 20, 2025 – the world was reminded just how much of the Internet rests on a single pillar: the US-EAST-1 region of Amazon Web Services. From the early morning hours Eastern Time (approx. 03:11 ET), AWS reported elevated errors and latencies across many services, with the root cause identified as DNS resolution failures for DynamoDB API endpoints in the N. Virginia region. After several hours, AWS announced "full mitigation" of the DNS issue, but secondary disruptions (particularly in EC2 instance launches and Network Load Balancer health checks) extended the incident's impact into the afternoon hours.

The impact was global: services from giants like Snapchat, Reddit, Zoom, Signal, Fortnite, and Venmo were unavailable or severely limited, and at times even Amazon.com, Prime Video, Alexa, and Ring experienced issues. At peak, millions of reports flooded DownDetector, and analysts spoke of damages running into the billions.

How did one of the largest outages in public cloud history unfold? Below we present the technical timeline of events, root cause analysis, a review of analogous failures in public clouds, and - most importantly - practical architectural takeaways from the perspective of infrastructure and network engineers.

What Exactly Happened – Technical Post-Mortem

1) Ignition Point: DNS → DynamoDB in US-EAST-1

AWS identified "elevated errors" in DynamoDB stemming from a DNS resolution issue to API endpoints in the N. Virginia region. When DNS cannot translate a name to an IP address, applications treat the service as unavailable even though the servers themselves may be healthy—this is the classic "fail-open on DNS" scenario that results in observable unavailability across the dependency chain.
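To make the distinction concrete, here is a minimal Python sketch of a probe that separates "the name did not resolve" from "the server refused the connection". The function name `probe`, its status strings, and the injectable `resolve`/`connect` parameters are illustrative assumptions, not part of any AWS tooling.

```python
import socket

def probe(host, port=443, timeout=2.0,
          resolve=socket.getaddrinfo, connect=socket.create_connection):
    """Classify an endpoint as 'dns_failure', 'connect_failure' or 'ok'."""
    # Step 1: name resolution. A failure here says nothing about server health.
    try:
        infos = resolve(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "dns_failure"

    # Step 2: TCP connect to the first resolved address (host, port).
    address = infos[0][4][:2]
    try:
        connect(address, timeout=timeout).close()
    except OSError:
        return "connect_failure"
    return "ok"
```

Reporting the two cases separately lets monitoring show that the servers may be healthy while the naming layer is not, which is the situation described above.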

2) Dependency Cascade in Control and Data Plane

Broken connectivity to DynamoDB affected a range of dependent services (IAM updates, Global Tables, Lambda components, CloudTrail), and in parallel the EC2 subsystem responsible for launching instances and Network Load Balancer health checks were degraded. This hampered reactive auto-scaling of applications and prolonged recovery. AWS deliberately throttled certain operations (e.g., EC2 launches, SQS→Lambda mappings) to avoid overload while "untangling" the backlog of pending requests.
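The idea of throttling while a backlog drains can be illustrated with a generic token bucket. This is a textbook sketch under assumed parameters, not a description of how AWS implements its throttles; `TokenBucket` and `drain` are hypothetical names.

```python
from collections import deque

class TokenBucket:
    """Allows at most `rate_per_s` operations per second, with bursts up to `capacity`."""

    def __init__(self, rate_per_s: float, capacity: float):
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = capacity  # start full
        self.last = 0.0         # timestamp of the previous refill

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

def drain(backlog: deque, bucket: TokenBucket, now: float) -> list:
    """Release as many queued requests as the bucket permits at time `now`."""
    released = []
    while backlog and bucket.allow(now):
        released.append(backlog.popleft())
    return released
```

Draining at a bounded rate trades a longer recovery for protection against a second overload caused by the backlog itself.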

3) Timeline and Recovery

AWS applied initial mitigations after approximately 2 hours, reporting "significant signs of recovery," and at 02:24 PDT (11:24 CEST) announced full mitigation of the DNS error. Full return of services to normal operation was confirmed at 15:01 PDT (00:01 CEST the following day), though some services (Config, Redshift, Connect) were still clearing message backlogs.
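The time conversions can be checked with a few lines of Python. The sketch assumes fixed offsets of UTC-7 for US Pacific time and UTC+2 for Central Europe, since both regions were still on daylight saving time on 20 October 2025; the variable names are illustrative.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets valid for 20 October 2025 (daylight saving time on both sides).
PACIFIC = timezone(timedelta(hours=-7), "PDT")
CENTRAL_EUROPE = timezone(timedelta(hours=2), "CEST")

dns_mitigated = datetime(2025, 10, 20, 2, 24, tzinfo=PACIFIC)
all_clear = datetime(2025, 10, 20, 15, 1, tzinfo=PACIFIC)

def to_central_europe(ts: datetime) -> str:
    """Render a timezone-aware timestamp in Central European local time."""
    return ts.astimezone(CENTRAL_EUROPE).strftime("%Y-%m-%d %H:%M")

print(to_central_europe(dns_mitigated))  # 2025-10-20 11:24
print(to_central_europe(all_clear))      # 2025-10-21 00:01
```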

4) Why Did a DNS Failure in One Region Infect Services Everywhere?

The US-EAST-1 region is historically the largest and oldest AWS region, serving as the "nerve center" for many globally-scoped control-plane functions. An error in the naming layer (DNS) for a critical component (DynamoDB API) therefore impacts not only local workloads but also global management paths, explaining the ripple effect on services in other regions.
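The ripple effect is essentially a transitive walk over a dependency graph. The sketch below uses the services named in the cascade description as nodes. The edges are a heavily simplified, hypothetical illustration rather than AWS's real internal dependency map, and `app-eu-west-1` and `static-site` are invented example workloads.

```python
from collections import deque

# Hypothetical "service -> things it depends on" edges, for illustration only.
DEPENDS_ON = {
    "dynamodb-api": ["dns:us-east-1"],
    "iam-updates": ["dynamodb-api"],
    "global-tables": ["dynamodb-api"],
    "lambda": ["dynamodb-api"],
    "cloudtrail": ["dynamodb-api"],
    "app-eu-west-1": ["iam-updates"],  # workload in another region
    "static-site": [],                 # no shared dependency
}

def blast_radius(failed: str, depends_on: dict) -> set:
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the edges: component -> services that depend on it.
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)

    impacted, queue = set(), deque([failed])
    while queue:  # breadth-first walk over dependents
        node = queue.popleft()
        for service in dependents.get(node, []):
            if service not in impacted:
                impacted.add(service)
                queue.append(service)
    return impacted
```

In this toy graph, a failure of `dns:us-east-1` reaches the workload in another region through `iam-updates`, while `static-site` stays unaffected. Running the same walk over your own dependency inventory is a cheap way to find hidden single-region dependencies.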

Has This Happened Before? Review of Similar Cloud Outages

- AWS, US‑EAST‑1 – 2017/2021/2023/2025: The Virginia region has been the "Achilles' heel" of the global ecosystem due to service concentration and control-plane dependencies; this year's outage reaffirmed that pattern.

- Akamai, 2021 – DNS/edge: An error at the DNS/CDN provider triggered global disruptions in major services—a related dynamic of single control point, massive blast radius.

- Microsoft Azure – DNS and Configuration Incidents: Azure has historically recorded outages linked to DNS and BGP, underscoring that no hyperscaler is immune to control-layer errors.

- CrowdStrike, 2024 (different nature): Though not an IaaS cloud, the global "single-update" showed how centralization and supply chain dependencies can destabilize the economy.

Conclusion: Today's public cloud delivers exceptional availability, but when incidents do occur, they have an enormous blast radius because of traffic concentration and dependencies. Experts have a specific term for this dangerous situation: concentration risk.

Will Such Outages Become More Frequent?

The pace of workload growth (AI/LLM, intensive edge, explosion of new data centers in Data Center Alley) combined with the complexity of operational changes increases the surface area for configuration errors and control-plane bottlenecks. This doesn't mean an avalanche of weekly outages, but the risk of "rare but large" events persists, and some analysts indicate that with rapid AI/accelerator scaling it may grow. Therefore, the proper response is resilience engineering on the customer side (not just relying on provider SLAs).

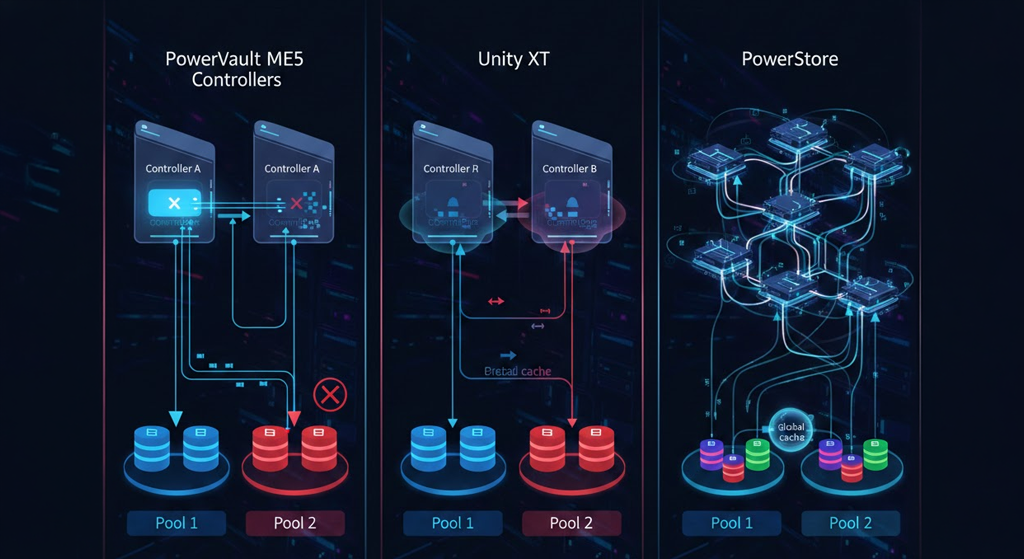

What Could Have Limited the Impact: Resilience Patterns

- Multi-Region with Distributed Independence

Simply spreading across AZs in US-EAST-1 is insufficient when the dependency involves control-plane or global services tied to that region. A second primary region is needed with an independent set of data/secrets resources, separate CI/CD paths, and routable DNS/traffic-manager failover at the organization level.

- Warm Standby / Active-Active Across Clouds (Multi-Cloud) – Where Justified

For critical flows (authorization, shopping cart, payments, key APIs), an active second track in another cloud or, ideally, on-prem can be justified to cut concentration risk. Costs and complexity rise, but the achievable RTO (Recovery Time Objective) and RPO (Recovery Point Objective) improve as well.

- Resilient Client Patterns: Exponential Backoff, Circuit Breakers, DNS Caching with Short TTL and "Fail-Closed"

Many secondary impacts resulted from retry cascades and congestion. Well-designed limits, error budgets, and backpressure contain the wave of secondary failures.
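Two of the client patterns named above, exponential backoff and a circuit breaker, can be sketched in a few dozen lines of Python. This is a minimal illustration under assumed thresholds, not a production library; `CircuitBreaker`, `backoff_delays`, and `call_with_retries` are hypothetical names, and the backoff uses the common "full jitter" variant.

```python
import random
import time

class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is rejected immediately."""

class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpenError("circuit open; failing fast")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0  # any success closes the circuit again
        return result

def backoff_delays(count, base_s=0.2, cap_s=10.0, rng=random.random):
    """Full jitter: delay i is uniform in [0, min(cap_s, base_s * 2**i))."""
    return [rng() * min(cap_s, base_s * 2 ** i) for i in range(count)]

def call_with_retries(fn, breaker, attempts=4, sleep=time.sleep):
    """Retry `fn` through the breaker, sleeping a jittered delay between attempts."""
    delays = backoff_delays(attempts - 1)
    for attempt in range(attempts):
        try:
            return breaker.call(fn)
        except CircuitOpenError:
            raise  # do not hammer a dependency the breaker has given up on
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(delays[attempt])
```

The jitter spreads retries from many clients over time, and the breaker stops retries altogether once a dependency is clearly down. Together they limit the retry cascades described above.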

On-Premise Infrastructure as Part of Resilience (and Cost) Strategy: When Does It Make Sense?

In our analysis, we don't propose "fleeing the cloud." We propose a conscious mix: critical components and stable, predictable workloads can be maintained in owned infrastructure - as a second track or first choice - reducing risk concentration and TCO over a 3-5 year horizon.

Key Advantages of Well-Designed On-Prem:

Scalability Up and Down – On Your Terms

In the cloud, scaling "up" is trivial—scaling "down" is often difficult due to commitments, reservations, and operational fees. On-prem allows flexible "right-sizing" of clusters (e.g., power-save, node hibernation, elastic GPU/CPU pools) without penalty costs for de-provisioning. This is critical for seasonality (retail, media, events) and for ML workloads post-model training. (Mechanically: HCI, vSphere/KVM, power profile automation, bare-metal pool).

Security and Data Sovereignty

For PII, medical, financial, or IP data, local control of the trust domain (own KMS/HSM, network segmentation, no shared management plane) simplifies compliance and trust chain inspection, without implicit region or control-plane dependencies. The AWS incident demonstrated that a global control plane can be a common dependency across many services.

Lower Total Cost (TCO) for Predictable Workloads

With stable, high, and predictable usage (e.g., ERP, DWH with nightly cycles, render farms, edge inference), capital cost + depreciation + energy + support often beat continuous subscription for managed services and transfer. Additionally, we eliminate "taxes" for egress and certain managed components.
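The comparison can be framed as simple arithmetic. In the sketch below every figure is a placeholder chosen only to show the calculation, not a real price, quote, or benchmark. The function names and the straight-line amortization model are likewise assumptions.

```python
def on_prem_monthly(capex: float, amortization_months: int,
                    energy: float, support: float) -> float:
    """Straight-line amortized hardware cost plus monthly running costs."""
    return capex / amortization_months + energy + support

def cloud_monthly(subscription: float, egress_tb: float,
                  egress_price_per_tb: float) -> float:
    """Flat monthly subscription plus data-transfer (egress) charges."""
    return subscription + egress_tb * egress_price_per_tb

# Placeholder inputs, not real prices.
on_prem = on_prem_monthly(capex=360_000, amortization_months=48,
                          energy=1_500, support=2_000)
cloud = cloud_monthly(subscription=12_000, egress_tb=40, egress_price_per_tb=80)

# Difference accumulated over a 36-month horizon (positive favors on-prem).
savings_36_months = (cloud - on_prem) * 36
```

With real inputs the sign of the result can flip, especially for bursty or low-utilization workloads, which is why the model should be run per workload profile.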

Operational Comfort and Predictability

"Ownership" of the control plane eliminates a certain class of risks: central provider outages don't halt logistics, CI/CD, or autoscaling mechanisms, because there are no cross-cloud dependencies in critical paths. The October 20 incident starkly illustrated the point: an organization with owned infrastructure in its critical paths could keep delivering its services while others, with no ability to influence the situation, waited for the provider to fix the outage.

Cloud Integration on Your Terms

Nothing prevents hybridization: burst to cloud for peaks, S3-compatible objects on-prem as origin of truth, cloud as DR (or vice versa). The key is traffic design (Anycast/DNS/GLB) and data asymmetry (e.g., read-heavy offload to CDN/cloud, write-primary locally).
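The data asymmetry mentioned above (writes stay local, reads are offloaded) can be expressed as a small routing rule. This is a sketch under assumed names: the backend labels `cloud-read-tier` and `onprem-primary` and the shape of the `healthy` dictionary are hypothetical.

```python
READ_METHODS = {"GET", "HEAD"}

def pick_backend(method: str, healthy: dict) -> str:
    """Route reads to the cloud/CDN tier when it is healthy; everything else goes local.

    `healthy` maps backend names to booleans, e.g. fed by health checks.
    """
    if method.upper() in READ_METHODS and healthy.get("cloud-read-tier", False):
        return "cloud-read-tier"
    if healthy.get("onprem-primary", False):
        return "onprem-primary"  # writes always, reads as a fallback
    raise RuntimeError("no healthy backend for " + method)
```

With this rule a cloud outage only moves read traffic back on-prem, and writes never depend on the cloud tier at all.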

It's important to note that on-prem requires operational discipline (monitoring, capacity planning, hardware lifecycle). In return, it provides cost predictability and a reduction of concentration risk, the very risk that just materialized at AWS.

How Hardware Direct Can Help

If you are considering incorporating owned infrastructure into your resilience strategy (or modernizing it), the Hardware Direct team designs, delivers, and deploys physical server architecture. We're happy to prepare a reference architecture and TCO model for your specific workload profile - to combine cloud convenience with the control and resilience of your own environment.