Already trained the model? Now it’s time to move it into production - fast, stable, and without bottlenecks. This is where AI inference comes in: the phase in which your model processes live data and returns results in real time. To make this effective, you need the right hardware: an inference server capable of sustaining heavy loads, with a GPU and CPU optimized not for training but for low-latency, high-throughput inference at scale.

AI inference in practice – what really impacts latency and scalability?

When deploying AI models in production environments, response time often becomes just as critical as the model's accuracy. In the AI inference stage, where an already trained model produces predictions, latency is measured in milliseconds and every delay matters. For workloads such as chatbots, real-time analytics, IoT, video monitoring, or recommendation engines, the key factors are latency, throughput, and scalability. Once the model is accurate enough, the question is less about how precise it is and more about whether it can handle 10,000 concurrent requests without delay.
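Latency, throughput, and concurrency are tied together by Little's law: the average number of requests in flight equals the arrival rate multiplied by the time each request spends in the system. The sketch below uses that relation to size a deployment. The workload figures (2,000 QPS, 50 ms, 16 concurrent slots per replica) are hypothetical, and the estimate assumes steady-state traffic.

```python
import math

def required_concurrency(target_qps: float, latency_ms: float) -> float:
    """Little's law: requests in flight = arrival rate x time each request spends in the system."""
    return target_qps * latency_ms / 1000.0

def replicas_needed(target_qps: float, latency_ms: float, slots_per_replica: int) -> int:
    """Replicas needed if each one can work on `slots_per_replica` requests at the same time."""
    return math.ceil(required_concurrency(target_qps, latency_ms) / slots_per_replica)

# Hypothetical workload: 2,000 QPS with a 50 ms end-to-end latency per request.
print(required_concurrency(2000, 50))  # 100.0 requests in flight on average
print(replicas_needed(2000, 50, 16))   # 7 replicas of 16 concurrent slots each
```

The same relation works in reverse. Sustaining 10,000 concurrent requests at 50 ms each implies roughly 200,000 QPS of throughput. This is why shaving latency reduces the hardware needed for a given load.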

Several elements directly influence performance:

- GPU inference throughput
- Low latency from RAM and NVMe drives
- Effective thermal management
- Parallel query handling efficiency
- Support for INT8 and FP16 precision formats
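The last item matters because weight memory scales directly with bytes per parameter. Moving from FP32 to FP16 halves it, and INT8 halves it again. The sketch below counts only the weights, ignoring activations and any KV cache. The 7-billion-parameter model is a hypothetical example.

```python
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "INT8": 1}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for model weights alone, in decimal GB (activations and KV cache excluded)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Hypothetical 7B-parameter model.
for p in ("FP32", "FP16", "INT8"):
    print(p, weight_memory_gb(7e9, p))
# FP32 28.0
# FP16 14.0
# INT8 7.0
```

Smaller weights also mean less memory traffic per request. That is a large part of why low-precision inference improves latency on bandwidth-bound GPUs, not just capacity.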

Modern deployment workflows use tools like Triton Inference Server, which batches incoming requests dynamically and can run several model instances concurrently on the available GPUs. A well-optimized inference server can process hundreds of thousands of requests per day while maintaining low latency and reduced power consumption. This is why "production inference" has evolved into a dedicated specialization: it is no longer just about running the model, but about building a robust operational architecture.
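Dynamic batching trades a few milliseconds of queueing for much better GPU utilization. The simulation below shows the general idea and is not Triton's actual implementation. A batch is dispatched when it is full or when its oldest request has waited a maximum delay. Triton exposes comparable knobs per model, such as `max_batch_size` and `max_queue_delay_microseconds` in `config.pbtxt`. The `Batch` type and `dynamic_batches` function are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Batch:
    dispatch_ms: float       # when the batch would be handed to the GPU
    request_ids: List[int]   # indices into the arrival list

def dynamic_batches(arrivals_ms: List[float], max_batch: int, max_delay_ms: float) -> List[Batch]:
    """Simulate a simple dynamic batcher over sorted arrival times.

    A batch is dispatched as soon as it holds max_batch requests, or when its
    oldest request has waited max_delay_ms, whichever comes first.
    """
    batches: List[Batch] = []
    current: List[int] = []
    oldest = 0.0
    for i, t in enumerate(arrivals_ms):
        # The open batch's deadline expired before this request arrived: flush it.
        if current and t > oldest + max_delay_ms:
            batches.append(Batch(oldest + max_delay_ms, current))
            current = []
        if not current:
            oldest = t
        current.append(i)
        if len(current) == max_batch:          # full batch: dispatch immediately
            batches.append(Batch(t, current))
            current = []
    if current:                                # leftover requests wait out the delay
        batches.append(Batch(oldest + max_delay_ms, current))
    return batches

for b in dynamic_batches([0, 1, 2, 3, 10, 30], max_batch=4, max_delay_ms=5):
    print(b.dispatch_ms, b.request_ids)
# 3 [0, 1, 2, 3]
# 15 [4]
# 35 [5]
```

A longer maximum delay produces fuller batches and higher throughput. However, it adds up to that delay to the latency of lightly loaded periods, so this value is usually tuned against the latency budget.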

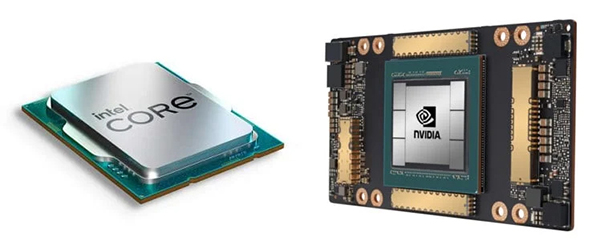

GPU inference without bottlenecks – choosing the right accelerator for QPS

Selecting a GPU for inference is more complex than simply checking VRAM size. For most production models (LLMs, vision networks, classifiers, recommendation systems), raw peak performance matters less than how efficiently the GPU handles low-precision computation (FP16, INT8), its memory bandwidth, and whether it can serve multiple concurrent streams. In a high-QPS (queries per second) environment, an inference GPU should not be monopolized by a single large model.
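Once you have benchmarked one GPU on your own model, a rough card count for a QPS target is a division plus headroom. The sketch below assumes a target utilization of 70% so that traffic spikes do not push latency up. Both that figure and the benchmark numbers in the usage line are hypothetical.

```python
import math

def gpus_for_qps(target_qps: float, measured_qps_per_gpu: float,
                 target_utilization: float = 0.7) -> int:
    """GPUs needed so each runs at or below target_utilization of its measured peak QPS."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_qps = measured_qps_per_gpu * target_utilization
    return math.ceil(target_qps / usable_qps)

# Hypothetical: 1,500 QPS target, 400 QPS measured per GPU at acceptable latency.
print(gpus_for_qps(1500, 400))        # 6 with 30% headroom
print(gpus_for_qps(1500, 400, 1.0))   # 4 with no headroom at all
```

The measured per-GPU QPS should come from a load test at your latency target, not from peak throughput. A card can reach much higher QPS if latency is allowed to grow.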

Commonly deployed inference GPUs include the NVIDIA A2, A10, and L40S, plus Jetson Orin for edge AI. All offer strong performance-to-cost ratios, are supported by frameworks such as TensorRT, Triton, and Red Hat AI Inference Server, and can be deployed without specialized chassis or extreme cooling setups.

For example, a Dell PowerEdge R760xa with A10 GPUs can handle up to 3× more requests than older T4-based solutions, while reducing energy consumption and delivering more stable performance under load. GPU planning should consider not just compute power but also rack space, power delivery, and room to scale. If you anticipate scaling from a single card today to four in six months, make sure the motherboard, power supply, and cooling system are ready for that expansion.
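A quick power-budget check before buying makes the one-to-four-card plan concrete. The sketch assumes 1+1 redundant supplies, so a single PSU must carry the whole load, with a ceiling of 80% of its rating. The 2,400 W PSU and 800 W base-system figures are hypothetical. The per-GPU board power values follow NVIDIA's published specs (A10: 150 W, L40S: 350 W) but should be checked against your exact SKU.

```python
def planned_load_w(base_system_w: float, gpu_board_power_w: float, gpu_count: int) -> float:
    """Worst-case draw: base platform (CPUs, RAM, drives, fans) plus every GPU at full board power."""
    return base_system_w + gpu_board_power_w * gpu_count

def fits_psu(psu_rating_w: float, load_w: float, max_utilization: float = 0.8) -> bool:
    """With 1+1 redundant supplies, one PSU must carry the whole load; keep it under max_utilization."""
    return load_w <= psu_rating_w * max_utilization

# Hypothetical platform: 2,400 W PSUs and an 800 W base system.
for name, watts in (("A10", 150), ("L40S", 350)):
    for count in (1, 4):
        load = planned_load_w(800, watts, count)
        print(f"{count}x {name}: {load:.0f} W, fits: {fits_psu(2400, load)}")
```

With these assumptions, four A10s fit comfortably, while four L40S cards would exceed the budget. Airflow and slot spacing need the same forward-looking check.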

Inference servers for LLMs – which CPU and how much RAM makes sense today?

For language models and generative AI, where concurrency and response time are critical, the CPU is no longer “just” a system orchestrator - it takes an active role in inference. Large transformer models (e.g., BERT, GPT, T5) generate significant CPU workloads through preprocessing, input/output handling, query batching, and context processing - especially in real-time inference scenarios. For LLM workloads, inference servers should start with at least 32 physical cores, with 64–96 cores preferred in enterprise deployments.

Memory requirements are just as important. To avoid slowdowns, 128–256 GB ECC DDR5 RAM has become the baseline - particularly in multi-model or containerized production environments.
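To check whether 128 or 256 GB is enough for a given multi-model host, a back-of-the-envelope sum is often sufficient. The sketch below makes several assumptions, all labeled as such. Each replica is assumed to keep its own host-side copy of the weights, which depends on the runtime. A 1.2× overhead factor covers runtime buffers and pre/post-processing, and 16 GB is reserved for the OS and services. The model mix is hypothetical.

```python
from typing import Dict, Tuple

def host_ram_needed_gb(models: Dict[str, Tuple[float, int]],
                       overhead_factor: float = 1.2,
                       os_reserve_gb: float = 16.0) -> float:
    """Rough host-RAM estimate for a multi-model, containerized inference host.

    models maps a name to (weights size in GB, number of replicas). Each replica is
    assumed to hold its own host-side copy of the weights; overhead_factor covers
    runtime buffers and pre/post-processing, os_reserve_gb the OS and services.
    """
    weights = sum(size_gb * replicas for size_gb, replicas in models.values())
    return weights * overhead_factor + os_reserve_gb

# Hypothetical mix: an FP16 7B LLM (about 14 GB of weights) plus two smaller models.
deployment = {"llm-7b-fp16": (14.0, 2), "embedder": (1.5, 4), "vision": (0.5, 4)}
need = host_ram_needed_gb(deployment)
print(f"{need:.1f} GB needed; fits in 128 GB: {need <= 128}")
```

Adding a second large model or more replicas quickly pushes such a host past 128 GB. This is the scenario in which the 256 GB end of the range makes sense.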

Recommended systems include the Supermicro SYS-421GE-TNHR, Lenovo ThinkSystem SR670 V2, and Dell PowerEdge R760xa. These provide balanced CPU-GPU configurations, room to scale, PCIe Gen4 or Gen5 (depending on platform generation), NVMe Gen4 support, and full out-of-band management (iDRAC, XClarity Controller, or IPMI). CPU and RAM selection is never incidental: these components determine how effectively the GPU sustains high-load inference.

Edge AI servers – compact, efficient, real-time for IoT and monitoring

Not all inference infrastructure runs in datacenters. Increasingly, inference is deployed in factories, retail points, hospitals, transportation, and surveillance - environments where data must be processed instantly and locally. Here, edge AI servers must be compact, quiet, energy-efficient, and robust against variable conditions, while still delivering real-time inference.

Solutions such as NVIDIA Jetson AGX Orin, Lenovo SE350, and Dell PowerEdge XR4000 are designed for this scenario. They integrate GPU inference accelerators, use fanless or enclosed active cooling, and typically consume under 250 W per unit. Many also support container management frameworks (K3s, Docker) and can work offline, synchronizing data only upon connection. These edge AI servers are no longer experiments - they are practical alternatives to mini-datacenters, especially for production lines, border control, vehicles, or CCTV networks.
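Working offline and synchronizing on reconnect is usually a store-and-forward pattern. The sketch below is hypothetical and does not describe a feature of any product named above. It buffers inference results in a bounded queue while the link is down and flushes them in order once it is up. Dropping the oldest results when the buffer fills is an assumed policy; some sites would rather spill to local disk.

```python
from collections import deque
from typing import Callable, Deque, List

class StoreAndForward:
    """Buffer inference results while offline; flush them in arrival order when online.

    The buffer is bounded: once full, appending a new result silently drops the oldest.
    """

    def __init__(self, send: Callable[[dict], None], capacity: int = 10_000):
        self._send = send
        self._buffer: Deque[dict] = deque(maxlen=capacity)

    def record(self, result: dict) -> None:
        self._buffer.append(result)

    def flush(self, online: bool) -> int:
        """Send buffered results if online; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self._buffer:
            self._send(self._buffer[0])  # send first, so a failure keeps the item buffered
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)

# Usage: a camera node records five results while offline with room for only three.
uploaded: List[dict] = []
node = StoreAndForward(uploaded.append, capacity=3)
for frame in range(5):
    node.record({"frame": frame, "label": "ok"})
print(node.pending())              # 3 (frames 0 and 1 were dropped)
print(node.flush(online=False))    # 0
print(node.flush(online=True))     # 3
print([r["frame"] for r in uploaded])  # [2, 3, 4]
```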

High-performance and cost-efficient – inference servers without a million-dollar entry point

Contrary to popular belief, deploying inference infrastructure doesn’t always require “six-figure invoices.” With predictable workloads and QPS estimates, it’s possible to build scalable, production-ready inference servers at a fraction of enterprise costs.

Examples include the Dell PowerEdge R660xs with an A10 or A2, Lenovo SR, and the Dell T560 tower with an RTX 4070 Ti Super and 256 GB RAM. Each of these systems has been validated for AI inference workloads without requiring over-engineered datacenter hardware. The key is not overpaying for features you won't use. If you don't need 8× GPUs, skip 3U servers with redundant power modules and focus on serviceable, upgradeable platforms instead. Adding software stacks like Triton, OpenVINO, or ONNX Runtime helps ensure maximum utilization. In practice, a properly optimized single-A10 inference server may outperform a misconfigured dual-L40S setup. AI inference is ultimately about balance: power, budget, efficiency, and manageability.
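One way to make "balance" measurable is cost per million inferences. This combines amortized hardware cost and electricity, divided by the queries actually served. The sketch uses a simplified 30-day month, and every price, wattage, and QPS figure in it is hypothetical. It shows why a cheaper, well-tuned box can beat a larger, poorly tuned one.

```python
HOURS_PER_MONTH = 24 * 30          # simplified 30-day month
SECONDS_PER_MONTH = HOURS_PER_MONTH * 3600

def cost_per_million(capex: float, amortization_months: int, avg_power_w: float,
                     price_per_kwh: float, avg_qps: float) -> float:
    """Amortized hardware cost plus electricity, per one million inferences."""
    monthly_capex = capex / amortization_months
    monthly_energy = avg_power_w / 1000 * HOURS_PER_MONTH * price_per_kwh  # kW x hours x price
    monthly_queries = avg_qps * SECONDS_PER_MONTH
    return (monthly_capex + monthly_energy) / monthly_queries * 1e6

# Hypothetical comparison: a tuned single-GPU box vs. a larger but poorly tuned dual-GPU box.
tuned = cost_per_million(capex=15_000, amortization_months=36, avg_power_w=600,
                         price_per_kwh=0.25, avg_qps=400)
untuned = cost_per_million(capex=45_000, amortization_months=36, avg_power_w=1_400,
                           price_per_kwh=0.25, avg_qps=350)
print(f"tuned: ${tuned:.2f} per 1M inferences, untuned: ${untuned:.2f}")
```

Average QPS here means sustained, served traffic. An idle server still accrues capex and idle power, so under-utilization shows up directly as a higher cost per query.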

Does every company need a dedicated inference node? When hybrid makes more sense

Not all businesses require a dedicated inference infrastructure. For many organizations, a hybrid model - combining local inference servers with cloud or edge nodes - is more cost-effective and scalable. This setup works especially well for variable workloads, project-based deployments, or scenarios where some inference nodes run 24/7 while others only spin up temporarily (e.g., seasonal traffic, marketing campaigns).
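For variable workloads, a useful first estimate is how much traffic a fixed local node would absorb and how much would overflow to the cloud. The sketch assumes each entry is the average QPS over one hour, and that local nodes serve demand up to their capacity while the excess bursts to a cloud endpoint. The demand profile is hypothetical.

```python
from typing import List, Tuple

def local_vs_cloud_share(hourly_qps: List[float], local_capacity_qps: float) -> Tuple[float, float]:
    """Fraction of queries served locally vs. burst to the cloud.

    Assumes each entry is the average QPS over one hour, local nodes absorb demand
    up to their capacity, and anything above that overflows to a cloud endpoint.
    """
    total = sum(hourly_qps)
    if total <= 0:
        raise ValueError("hourly_qps must contain some demand")
    local = sum(min(q, local_capacity_qps) for q in hourly_qps)
    return local / total, (total - local) / total

# Hypothetical four-hour window with a campaign spike in the last hour.
local, cloud = local_vs_cloud_share([100, 100, 400, 800], local_capacity_qps=400)
print(f"local: {local:.0%}, cloud: {cloud:.0%}")
```

If the cloud share is small and confined to short spikes, renting burst capacity is usually cheaper than buying hardware that would sit idle most of the year.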

One possible architecture: a local inference node in the office (e.g., Dell T560 with A10), paired with an edge deployment in the field (Jetson Orin or Lenovo SE350), all connected to a central cloud API. This approach ensures flexibility, maintains data control, and avoids overspending. The inference server does not always need to handle everything - modular architectures, split by task types, locations, and SLA tiers, provide better resilience and cost-efficiency.
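Splitting work by location and SLA tier ultimately comes down to a routing rule in front of the nodes. The sketch below is a hypothetical policy, not a prescribed design. Field requests with tight latency budgets stay on the edge device, data that must remain on premises goes to the office node, and everything else goes to the office node when it has capacity and to the cloud API otherwise. The `Request` fields and the 50 ms threshold are illustrative assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    origin: str                         # "field" (sensor/camera site) or "office"
    latency_slo_ms: int                 # latency budget the caller expects
    data_must_stay_local: bool = False  # e.g. regulated or sensitive inputs

def route(req: Request, local_has_capacity: bool) -> str:
    """Return "edge", "local", or "cloud" for a request (thresholds are illustrative)."""
    if req.origin == "field" and req.latency_slo_ms <= 50:
        return "edge"    # only an on-site node avoids the WAN round trip
    if req.data_must_stay_local or local_has_capacity:
        return "local"   # keep data on premises, or use capacity already paid for
    return "cloud"       # overflow when the local node is saturated

print(route(Request("field", 20), local_has_capacity=True))          # edge
print(route(Request("office", 300), local_has_capacity=False))       # cloud
print(route(Request("office", 300, True), local_has_capacity=False)) # local
```

Keeping the policy in one small function makes SLA tiers easy to audit and change without touching the nodes themselves.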

In 2025, AI inference is no longer just about raw performance - it is about designing a strategic, production-ready operational framework.